Intelligence Is Not Unique

Deep learning has succeeded in creating intelligent systems by implementing prediction as the core function

We know the theoretical path to artificial general intelligence; now it’s just an engineering problem.

The universal code for producing intelligence has been cracked

“How did we get to the doorstep of the next leap in prosperity?

In three words: deep learning worked.

In 15 words: deep learning worked, got predictably better with scale, and we dedicated increasing resources to it.

That’s really it; humanity discovered an algorithm that could really, truly learn any distribution of data (or really, the underlying “rules” that produce any distribution of data). To a shocking degree of precision, the more compute and data available, the better it gets at helping people solve hard problems. I find that no matter how much time I spend thinking about this, I can never really internalize how consequential it is.

There are a lot of details we still have to figure out, but it’s a mistake to get distracted by any particular challenge. Deep learning works, and we will solve the remaining problems. We can say a lot of things about what may happen next, but the main one is that AI is going to get better with scale, and that will lead to meaningful improvements to the lives of people around the world.”

— Sam Altman

It’s only a matter of time before we wire this universal predictive code into every intelligent behaviour and goal.

The idea that pure next-token prediction can scale to full intelligence crystallised in Ilya Sutskever’s interview provocatively titled “Why next-token prediction is enough for AGI.” Although he never states that claim outright, he does throw down a gauntlet: “I challenge the idea that next-token prediction cannot surpass human performance… to predict a token well you must understand the reality that produced it”.

Jeff Hawkins laid the conceptual groundwork two decades earlier in On Intelligence. Observing that the neocortex looks strikingly uniform, he argues it must run a single algorithm: “Since these regions all look the same, perhaps they’re doing the same computation.” He frames the brain as a world-model that constantly tests its forecasts against reality; on this view, humans out-predict other animals because we can handle more abstract, longer-range patterns.

In A Thousand Brains, Hawkins pushes further: “Prediction isn’t occasional; it’s the brain’s default mode. When predictions match reality, the model is good; mismatches trigger learning.” This view echoes Andy Clark’s recent *The Experience Machine*, which recasts the mind as a simulation engine: “The bulk of what the brain does is learn and maintain a model of body and world.” Clark’s core claim is that the brain first casts a world model and then lets sensory data edit it. Rather than (1) taking in information through the senses and (2) processing it into a world model to experience and act upon, Clark proposes that minds (1) create a model of the world and (2) update that model with sensory information wherever reality differs from prediction.

Across these perspectives the narrative converges: master prediction, and you master intelligence itself.

The puzzle of intelligence

Intelligence is hard to pin down¹, but we know it when we see it. AI systems are clearly smart in some way, but what does "intelligence" actually mean? Are there different roads to becoming intelligent?

“Viewed narrowly, there seem to be almost as many definitions of intelligence as there were experts asked to define it.”

— R. J. Sternberg, quoted in Gregory (1998)

When we call someone intelligent, we're usually commenting on their ability to successfully achieve certain tasks:

A high IQ indicates good performance on broad problem-solving tests

Being "book smart" means effectively using knowledge from books

Emotional intelligence involves accurately reading and responding to emotions

Street smarts reflect the ability to navigate real-world social dynamics successfully

In each case, ‘intelligence’ is achieving goals in specific environments.

“Intelligence measures an agent’s ability to achieve goals in a wide range of environments.” — Shane Legg (2008)
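Legg, working with Marcus Hutter, also turned this informal definition into a formula. As a sketch of how it is usually written (the notation below follows their universal intelligence measure as we understand it, and is not derived in this essay):

```latex
\[
\Upsilon(\pi) \;=\; \sum_{\mu \in E} 2^{-K(\mu)} \, V_{\mu}^{\pi}
\]
```

Here \(\pi\) is the agent, \(E\) is the set of environments considered, \(V_{\mu}^{\pi}\) is the expected total reward the agent earns in environment \(\mu\), and \(K(\mu)\) is the Kolmogorov complexity of that environment. The weight \(2^{-K(\mu)}\) counts simple environments more heavily than complex ones, so a high score requires achieving goals across many environments rather than excelling in one.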

The evolutionary nature of intelligence

This reveals something important: We have various definitions and types of intelligence precisely because humans possess countless different goals, shaped by our environmental challenges (evolutionary pressures).

Intelligence didn't appear suddenly—it evolved over time as living things faced challenges in their environments. We developed different foundations of intelligence as a result of our need to survive and adapt to our environments.

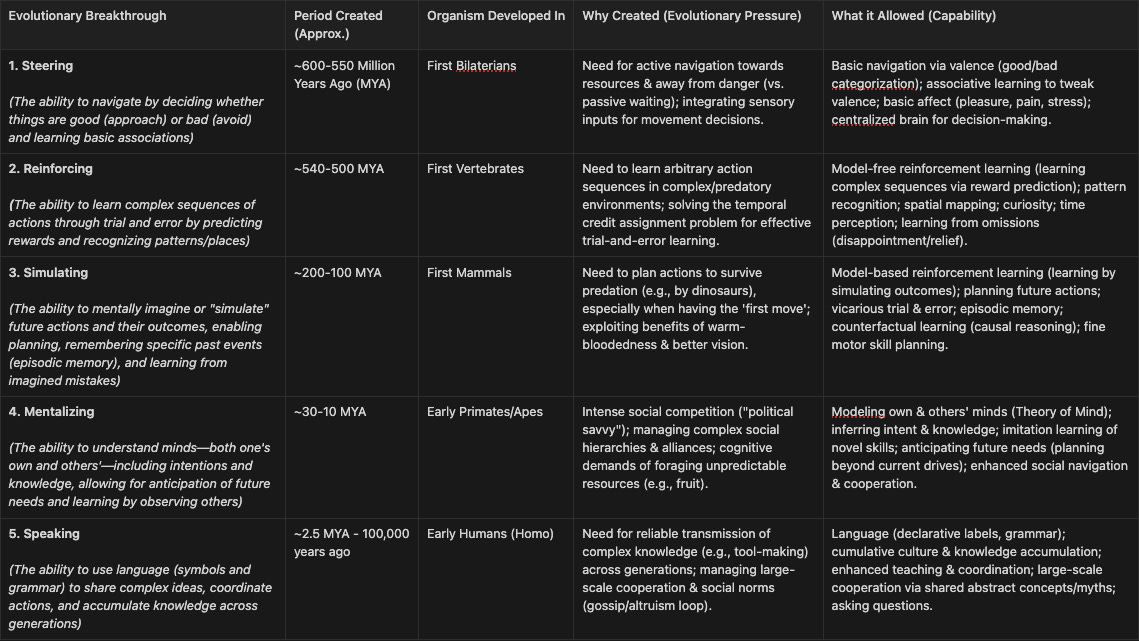

Max Bennett’s A Brief History of Intelligence shows us that intelligence is a layered toolbox of adaptations: each layer answered a specific evolutionary challenge, then became a platform for the next.

Each layer widened the capability horizon of humans and allowed us to become the dominant species on Earth.

Intelligence is Accurate Prediction

But each layer simply increased the power and sophistication of the one universal ability required for intelligence: accurate prediction².

Human intelligence – and indeed any intelligent system – can be viewed as fundamentally predictive in nature.

“There is a fundamental link between prediction and intelligence”

— Demis Hassabis

Your brain is constantly predicting what will happen next³:

As you walk, your brain predicts the feeling of your foot touching the ground

When reading, it predicts the next likely word

In conversation, it anticipates what others might say or do

Intelligence emerges from building accurate models of the world that let us predict outcomes and respond appropriately.

When viewed through this lens, it’s obvious that intelligence is not unique. It is simply the process through which any being (human, animal, or digital):

Accurately models reality

Predicts what will happen

Responds to those predictions in order to survive (or, in the case of mimetic, status-driven humans, to succeed)

Your brain runs continuous simulations of reality:

The neocortex predicts sensory information from the world around you

If predictions match reality, you don't notice anything unusual

If predictions are wrong (like expecting solid ground but finding a hole), your attention immediately focuses on the error

This prediction system has been refined through evolution:

Simple organisms predicted basic neural patterns

Vertebrates learned to predict rewards

Mammals with neocortexes learned to predict everything about their environment
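The reward-prediction step in this progression is commonly modelled in reinforcement learning with temporal-difference (TD) learning. As a minimal sketch, assume a hypothetical four-state corridor in which the agent always steps right and only the final step pays a reward. The function name, the corridor, and the parameter values are all illustrative assumptions, not taken from the works cited here.

```python
def td0_chain(num_states=4, gamma=0.9, alpha=0.5, episodes=200):
    """Learn reward predictions on a deterministic chain with TD(0).

    The agent starts in state 0 and always steps right. Leaving the last
    state ends the episode with reward 1; every other step pays 0.
    """
    # One extra slot for the terminal state, whose value stays at 0.
    values = [0.0] * (num_states + 1)
    for _ in range(episodes):
        for state in range(num_states):
            next_state = state + 1
            reward = 1.0 if next_state == num_states else 0.0
            # TD error: gap between the old prediction and a better-informed one.
            td_error = reward + gamma * values[next_state] - values[state]
            values[state] += alpha * td_error
    return values[:num_states]


if __name__ == "__main__":
    print([round(v, 3) for v in td0_chain()])
```

With a discount of 0.9, the learned values approach 0.729, 0.81, 0.9 and 1.0: the further a state is from the reward, the less of it the state predicts.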

All intelligent behaviour reduces to minimising the gap between an internal model and reality. We refine the model (learning) or act on the world (control) until error evaporates.

That constant loop of predict → compare → correct is the essence of intelligence: building predictions good enough to achieve goals across situations.
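The loop can be sketched in a few lines. This is a toy illustration, not a model of the brain: the "world" is a hypothetical stream of sensor readings, the "model" is a single number, and `learning_rate` is an assumed parameter that controls how strongly each error corrects the estimate.

```python
def track(observations, learning_rate=0.2, initial_estimate=0.0):
    """Run a predict -> compare -> correct loop over a stream of numbers."""
    estimate = initial_estimate
    errors = []
    for observed in observations:
        predicted = estimate                # predict
        error = observed - predicted        # compare
        estimate += learning_rate * error   # correct
        errors.append(abs(error))
    return estimate, errors


if __name__ == "__main__":
    # Hypothetical environment: a sensor that always reads 10.0.
    final_estimate, errors = track([10.0] * 50)
    print(final_estimate, errors[0], errors[-1])
```

Because each pass removes a fixed fraction of the remaining error, the surprise shrinks on every step for as long as the world stays the same. If the sensor value changed, the error would jump and the loop would start correcting again.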

By relentlessly improving how well our models predict, we are directly on the path to AGI

Many experts⁴ now believe we've discovered the fundamental architecture for achieving artificial general intelligence. The breakthrough success of transformer models like GPT, Gemini, and Claude has demonstrated that intelligence can emerge from a relatively simple underlying process: prediction applied to rich world models.

We haven’t created intelligence for all economically valuable human tasks, but we now possess the recipe to do so: a universal prediction engine (self-supervised transformers + reinforcement learning) that scales with data and compute. What remains is feeding that engine richer experience and structuring it to build world and agent models, not just next-token guesses.

The Missing Piece: World Models and Grounded Understanding

If both brains and current AIs are prediction machines, why are humans still so much more generally intelligent? The gap between current AI systems and human intelligence⁵ lies in what the predictions are grounded in. While AI excels at prediction within narrow domains, it lacks the full, rich world model that humans possess. The brain's predictions are grounded in a lifetime of multimodal experience in a complex physical and social world; today's AI predictions often rely on relatively shallow training data.

A 5-year-old child has a robust model of reality: intuitive physics, basic psychology, spatial navigation, and language. This world model is richly grounded through manipulating objects, playing, and interacting with people. By contrast, even state-of-the-art LLMs have never felt gravity or understood what words like "coffee cup" refer to in a sensorimotor sense. They predict text patterns without connecting words to tangible reality, which can lead to physically absurd assertions.

Even reinforcement learning agents learn in limited domains. They lack a unified model of physics, chemistry, and society. Humans integrate diverse knowledge in one brain; AI is usually trained for single tasks. This siloed learning prevents AI's predictions from generalizing beyond its training distribution. Humans can infer outcomes in novel situations through common sense—we know an unsupported glass will fall and break, not because we've seen that exact scenario, but because our world model predicts it.

The key missing component for AI is accurate and richly grounded world models. We need AI that learns like a child, through multi-sensory, embodied experience. This is what the "Era of Experience" advocates: letting AI agents interact with the world to gather data that grounds predictions in reality. Recent efforts move in this direction: robots learning through interaction, virtual agents in 3D simulations, and multimodal models integrating multiple senses.

Human predictive models also incorporate causality—we infer cause and effect and can imagine interventions. Many AI systems excel at statistical prediction but don't represent causality explicitly, making them brittle when conditions change. Building AI that understands causal relationships is crucial for general intelligence.
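A small worked example makes the difference between seeing and doing concrete. Assume a hypothetical hidden factor Z that drives both X and Y, while X has no effect on Y at all. The probabilities below are made up for illustration, and the two function names are ours.

```python
# Hypothetical structural model: Z -> X and Z -> Y, with no arrow from X to Y.
P_Z = {0: 0.5, 1: 0.5}             # prior over the hidden common cause
P_X1_GIVEN_Z = {0: 0.1, 1: 0.9}    # P(X=1 | Z)
P_Y1_GIVEN_Z = {0: 0.2, 1: 0.8}    # P(Y=1 | Z); Y ignores X entirely


def p_y1_given_x1():
    """Observational: P(Y=1 | X=1), using Bayes' rule over Z."""
    joint_x1 = {z: P_Z[z] * P_X1_GIVEN_Z[z] for z in P_Z}
    p_x1 = sum(joint_x1.values())
    return sum(P_Y1_GIVEN_Z[z] * joint_x1[z] / p_x1 for z in P_Z)


def p_y1_do_x1():
    """Interventional: P(Y=1 | do(X=1)).

    Setting X by hand cuts the Z -> X link, so Z keeps its prior.
    """
    return sum(P_Y1_GIVEN_Z[z] * P_Z[z] for z in P_Z)


if __name__ == "__main__":
    print(p_y1_given_x1(), p_y1_do_x1())
```

Observing X=1 raises the predicted probability of Y to about 0.74, but forcing X=1 leaves it at 0.5. A purely statistical predictor trained on observations would learn the first number and be wrong about the intervention, which is the brittleness described above.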

In short, while prediction mechanisms are well developed in AI, what's lacking is the comprehensive model of the world in which those predictions live. The brain's prediction machine benefits from millions of years of encoded evolutionary priors and bodily experience; AI's prediction machines are still closer to blank slates trained on narrow slices of data. Bridging this gap requires more holistic learning: integrating across modalities, tasks, and time.

Once an AI does have a richly grounded world model, its prediction-based algorithms could make it incredibly capable—efficiently predicting outcomes, planning with fewer mistakes, adapting with human-like ease, and exceeding human foresight in many domains. The machinery of prediction is ready—what's needed is the kind of experiences and structured knowledge that humans take for granted.

“Learning systems are mirrors of their environment, and systems cannot bootstrap themselves to learn about anything without connection to an external environment, no matter how intelligent they are.

There needs to be something to learn and predict; what any system can possibly learn is limited by the information it is fed. The type of inputs fed to a given system define its representation space and learning capacity, which for us includes the basic sense data we perceive as colour, smell, taste, sounds, and so on.

For an AI system, these fundamental representation units will be the format of the training and input data, such as word tokens for a language model. Everything that is learned by any system is represented eventually in terms of these fundamental units - these are their bridges to the true structure of reality.”

Right now, AI's mirror of the environment is shallow. By broadening and grounding that input, we can polish the mirror into a true reflection of the world, enabling AI's predictive mind to far surpass our own.

Prediction as the pathway

Intelligence, at its essence, is prediction accurately applied to achieve goals. Evolution built predictive abilities incrementally, leading neuroscientific theories describe the brain as a prediction machine, and AI’s greatest achievements explicitly rely on advanced predictive algorithms.

The final leap for AI, matching human-level intelligence across diverse tasks, lies not in reinventing prediction but in providing richer, grounded experiences and deeper causal understandings. As AI increasingly mirrors biological predictive processes—embodied learning, world modeling, social reasoning—it will inevitably cross the threshold into true general intelligence.

Summary

Deep learning has succeeded in creating intelligent systems by implementing prediction as the core function, with experts arguing this universal approach will apply to all intelligent behaviors

The brain fundamentally works by predicting sensory inputs and outcomes, with intelligence defined as "an agent's ability to achieve goals in a wide range of environments" through accurate predictions

Intelligence evolved in layers (steering, reinforcing, simulating, mentalizing, speaking), each expanding predictive capabilities to solve different survival challenges

Current AI lacks the richly grounded world models humans possess through embodied experience, but providing AI with multimodal, causal understanding will eventually lead to general intelligence

Take for example this analysis by Shane Legg, collecting ten different definitions of intelligence:

“It seems to us that in intelligence there is a fundamental faculty, the alteration or the lack of which, is of the utmost importance for practical life. This faculty is judgement, otherwise called good sense, practical sense, initiative, the faculty of adapting oneself to circumstances.” A. Binet [BS05]

“The capacity to learn or to profit by experience.” W. F. Dearborn quoted in [Ste00]

“Ability to adapt oneself adequately to relatively new situations in life.” R. Pinter quoted in [Ste00]

“A person possesses intelligence insofar as he has learned, or can learn, to adjust himself to his environment.” S. S. Colvin quoted in [Ste00]

“We shall use the term ‘intelligence’ to mean the ability of an organism to solve new problems . . . ” W. V. Bingham [Bin37]

“A global concept that involves an individual’s ability to act purposefully, think rationally, and deal effectively with the environment.” D. Wechsler [Wec58]

“Individuals differ from one another in their ability to understand complex ideas, to adapt effectively to the environment, to learn from experience, to engage in various forms of reasoning, to overcome obstacles by taking thought.” American Psychological Association [NBB+96]

“. . . I prefer to refer to it as ‘successful intelligence.’ And the reason is that the emphasis is on the use of your intelligence to achieve success in your life. So I define it as your skill in achieving whatever it is you want to attain in your life within your sociocultural context — meaning that people have different goals for themselves, and for some it’s to get very good grades in school and to do well on tests, and for others it might be to become a very good basketball player or actress or musician.” R. J. Sternberg [Ste03]

“Intelligence is part of the internal environment that shows through at the interface between person and external environment as a function of cognitive task demands.” R. E. Snow quoted in [Sla01]

“. . . certain set of cognitive capacities that enable an individual to adapt and thrive in any given environment they find themselves in, and those cognitive capacities include things like memory and retrieval, and problem solving and so forth. There’s a cluster of cognitive abilities that lead to successful adaptation to a wide range of environments.” D. K. Simonton [Sim03]

Several theories further unpack how prediction underpins intelligence:

Reinforcement Learning (RL): Systems learn predictions of future rewards, guiding decisions. Sutton and Barto emphasize value functions as core predictive mechanisms (e.g., AlphaGo’s foresight in complex game strategies).

Bayesian Inference: Our brains continuously refine predictions by combining prior beliefs and sensory data, evident in visual illusions and everyday decision-making.

Free Energy Principle: Proposed by Karl Friston, it describes organisms as fundamentally driven to minimize prediction errors, constantly updating internal models to reflect reality (e.g., visual perception stabilizing despite constant eye movements).

Algorithmic Information Theory: Intelligence as efficient compression of complex data into simpler predictive models, as seen in GPT models predicting text by capturing linguistic patterns concisely.
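The link between prediction and compression in the last item can be made concrete: a symbol predicted with probability p can be encoded in about -log2(p) bits, so a better predictor yields a shorter description of the same data. The sketch below compares a predictor that treats every character as equally likely with a bigram predictor that conditions on the previous character. It is illustrative only: the counts are fitted and scored on the same text, an ideal coder is assumed, and the function names are ours.

```python
import math
from collections import Counter


def bits_per_char_uniform(text):
    """Code length per character if every distinct character is equally likely."""
    return math.log2(len(set(text)))


def bits_per_char_bigram(text):
    """Average -log2 p(next char | previous char), with p taken from bigram counts."""
    pair_counts = Counter(zip(text, text[1:]))
    context_counts = Counter(text[:-1])
    total_bits = 0.0
    for (prev, _next_char), count in pair_counts.items():
        p = count / context_counts[prev]
        total_bits += -count * math.log2(p)
    return total_bits / (len(text) - 1)


if __name__ == "__main__":
    sample = "the cat sat on the mat. " * 3
    print(bits_per_char_uniform(sample), bits_per_char_bigram(sample))
```

On a perfectly regular string such as "abababab", the bigram predictor is never surprised and needs zero bits per character, while the uniform predictor still spends one bit on each. Language models apply the same idea with far richer context than a single preceding character.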

“As you walk down the street, you are not paying attention to the feelings of your feet. But with every movement you make, your neocortex is passively predicting what sensory outcome it expects. If you placed your left foot down and didn’t feel the ground, you would immediately look to see if you were about to fall down a pothole. Your neocortex is running a simulation of you walking, and if the simulation is consistent with sensor data, you don’t notice it, but if its predictions are wrong, you do.

Brains have been making predictions since early bilaterians, but over evolutionary time, these predictions became more sophisticated. Early bilaterians could learn that the activation of one neuron tended to precede the activation of another neuron and could thereby use the first neuron to predict the second. This was the simplest form of prediction. Early vertebrates could use patterns in the world to predict future rewards. This was a more sophisticated form of prediction. Early mammals, with the neocortex, learned to predict more than just the activation of reflexes or future rewards; they learned to predict everything.

The neocortex seems to be in a continuous state of predicting all its sensory data. If reflex circuits are reflex-prediction machines, and the critic in the basal ganglia is a reward-prediction machine, then the neocortex is a world-prediction machine—designed to reconstruct the entire three-dimensional world around an animal to predict exactly what will happen next as animals and things in their surrounding world move.

The point is that human brains have an automatic system for predicting words (one probably similar, at least in principle, to models like GPT-3) and an inner simulation. Much of what makes human language powerful is not the syntax of it, but its ability to give us the necessary information to render a simulation about it and, crucially, to use these sequences of words to render the same inner simulation as other humans around us.”

— Max Bennett, A Brief History Of Intelligence

“Thoughts about o3: I’ll skip the obvious part (extraordinary reasoning, FrontierMath is insanely hard, etc). I think the essence of o3 is about relaxing a single-point RL super intelligence to cover more points in the space of useful problems. The world of AI is no stranger to RL achieving god-level stunts.

AlphaGo was a super intelligence. It beats the world champion in Go — well above 99.999% of regular players.

AlphaStar was a super intelligence. It bests some of the greatest e-sport champion teams on StarCraft.

Boston Dynamics e-Atlas was a super intelligence. It performs perfect backflips. Most human brains don’t know how to send such surgically precise control signals to their limbs.

Similar statement can be made for AIME, SWE-Bench, and FrontierMath — they are like Go, which requires exceptional domain expertise above 99.99….% of average people. o3 is a super intelligence when operating in these domains.

The key difference is that AlphaGo uses RL to optimize for a simple, almost trivially defined reward function: winning the game gives 1, losing gives 0. Learning reward functions for sophisticated math and software engineering are much harder. o3 made a breakthrough in solving the reward problem, for the domains that OpenAI prioritizes. It is no longer an RL specialist for single-point task, but an RL specialist for a bigger set of useful tasks.

Yet o3’s reward engineering could not cover ALL distribution of human cognition. This is why we are still cursed by Moravec’s paradox. o3 can wow the Fields Medalists, but still fail to solve some 5-yr-old puzzles. I am not at all surprised by this cognitive dissonance, just like we wouldn’t expect AlphaGo to win Poker games.

Huge milestone. Clear roadmap. More to do.”

Current models like ChatGPT lack critical components of human intelligence:

Rich world models: Human brains develop extensive models of physical reality, enabling commonsense reasoning. LLMs lack genuine understanding, failing simple physical intuition tasks.

Grounded understanding: Humans learn through embodied interactions; AI mostly learns through static datasets, lacking direct experiential grounding.

Mentalizing and theory of mind: Humans effortlessly infer intentions and beliefs; LLMs rely on statistical patterns, limiting nuanced social understanding.

Continual learning: Biological brains constantly adapt; most AI systems remain static after training, and updating them on new data risks catastrophic forgetting of what they already learned.